Thank you for your trust over the past year! To thank you, we are offering FREE shipping (within Belgium) on everything throughout the month of January.

- Pick up after 1 hour in a store with stock

- Free home delivery in Belgium in January

- Wide range of 7 million products

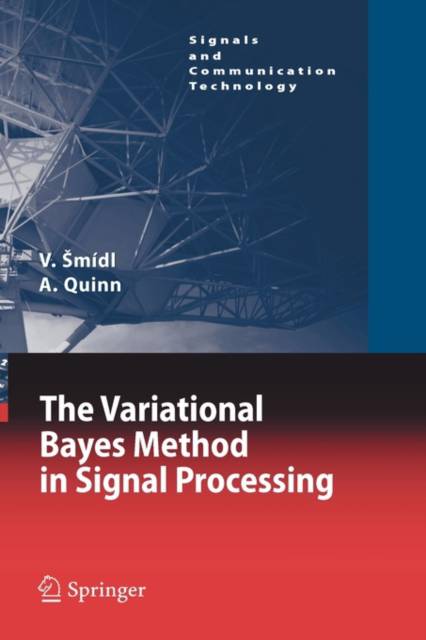

€ 167,95

+ 335 points

Format

Description

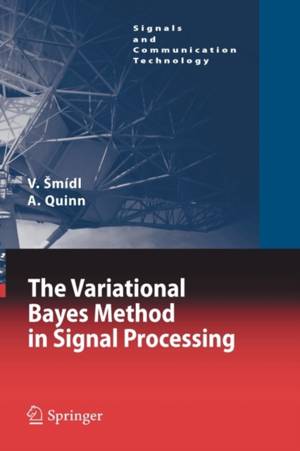

Gaussian linear modelling cannot address current signal processing demands. In modern contexts, such as Independent Component Analysis (ICA), progress has been made specifically by imposing non-Gaussian and/or non-linear assumptions. Hence, standard Wiener and Kalman theories no longer enjoy their traditional hegemony in the field, revealing the standard computational engines for these problems. In their place, diverse principles have been explored, leading to a consequent diversity in the implied computational algorithms. The traditional on-line and data-intensive preoccupations of signal processing continue to demand that these algorithms be tractable. Increasingly, full probability modelling (the so-called Bayesian approach), or partial probability modelling using the likelihood function, is the pathway for design of these algorithms. However, the results are often intractable, and so the area of distributional approximation is of increasing relevance in signal processing. The Expectation-Maximization (EM) algorithm and Laplace approximation, for example, are standard approaches to handling difficult models, but these approximations (certainty equivalence, and Gaussian, respectively) are often too drastic to handle the high-dimensional, multi-modal and/or strongly correlated problems that are encountered. Since the 1990s, stochastic simulation methods have come to dominate Bayesian signal processing. Markov Chain Monte Carlo (MCMC) sampling, and related methods, are appreciated for their ability to simulate possibly high-dimensional distributions to arbitrary levels of accuracy. More recently, the particle filtering approach has addressed on-line stochastic simulation. Nevertheless, the wider acceptability of these methods (and, to some extent, Bayesian signal processing itself) has been undermined by the large computational demands they typically make.

Specifications

Contributors

- Author(s):

- Publisher:

Contents

- Number of pages: 228

- Language: English

- Series:

Properties

- Product code (EAN): 9783540288190

- Publication date: 29/11/2005

- Format: Hardcover

- Binding: Unsewn / adhesive bound

- Dimensions: 162 mm x 240 mm

- Weight: 494 g

Only at Standaard Boekhandel

+ 335 points on your Standaard Boekhandel loyalty card
