- Afhalen na 1 uur in een winkel met voorraad

- Gratis thuislevering in België vanaf € 30

- Ruim aanbod met 7 miljoen producten

- Afhalen na 1 uur in een winkel met voorraad

- Gratis thuislevering in België vanaf € 30

- Ruim aanbod met 7 miljoen producten

Omschrijving

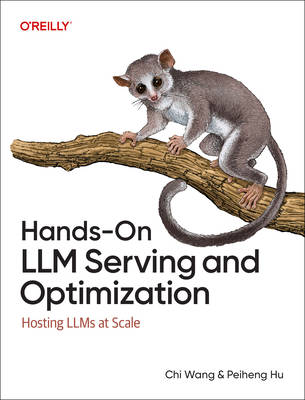

Large language models (LLMs) are rapidly becoming the backbone of AI-driven applications. Without proper optimization, however, LLMs can be expensive to run, slow to serve, and prone to performance bottlenecks. As the demand for real-time AI applications grows, along comes Hands-On Serving and Optimizing LLM Models, a comprehensive guide to the complexities of deploying and optimizing LLMs at scale.

In this hands-on book, authors Chi Wang and Peiheng Hu take a real-world approach backed by practical examples and code, and assemble essential strategies for designing robust infrastructures that are equal to the demands of modern AI applications. Whether you're building high-performance AI systems or looking to enhance your knowledge of LLM optimization, this indispensable book will serve as a pillar of your success.

- Learn the key principles for designing a model-serving system tailored to popular business scenarios

- Understand the common challenges of hosting LLMs at scale while minimizing costs

- Pick up practical techniques for optimizing LLM serving performance

- Build a model-serving system that meets specific business requirements

- Improve LLM serving throughput and reduce latency

- Host LLMs in a cost-effective manner, balancing performance and resource efficiency

Specificaties

Betrokkenen

- Auteur(s):

- Uitgeverij:

Inhoud

- Aantal bladzijden:

- 300

- Taal:

- Engels

Eigenschappen

- Productcode (EAN):

- 9798341621497

- Verschijningsdatum:

- 2/06/2026

- Uitvoering:

- Paperback

- Formaat:

- Trade paperback (VS)

- Afmetingen:

- 178 mm x 233 mm

Alleen bij Standaard Boekhandel

Beoordelingen

We publiceren alleen reviews die voldoen aan de voorwaarden voor reviews. Bekijk onze voorwaarden voor reviews.