- Pick up after 1 hour at a store that has the item in stock

- Free home delivery in Belgium on orders from €30

- Wide range of 7 million products

Description

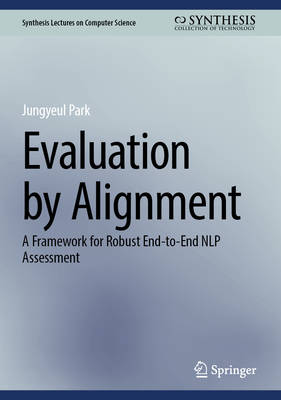

This book presents a novel, alignment-based evaluation framework that tackles a persistent challenge in natural language processing (NLP): how to evaluate systems fairly and accurately when preprocessing steps such as tokenization and sentence boundary detection (SBD) produce different boundaries in the gold standard and in the system output. By introducing the jointly preprocessed evaluation algorithm (jp-algorithm), the book proposes a solution that brings precision and flexibility to the assessment of modern, end-to-end NLP systems.

Traditional evaluation methods assume that references and hypotheses share identical sentence and token boundaries, which makes them poorly suited to real-world data and to increasingly common end-to-end architectures. The jp-algorithm addresses these shortcomings with a linear-time alignment strategy inspired by techniques from machine translation. This method allows robust comparisons even when input segmentation differs, enabling reliable evaluation in tasks such as preprocessing, constituency parsing, and grammatical error correction (GEC).

The book explores how misaligned preprocessing affects standard evaluation metrics, including PARSEVAL for constituency parsing and F0.5 for GEC, and provides empirical solutions for preserving evaluation accuracy without sacrificing methodological integrity. Through detailed case studies, formal algorithmic descriptions, and practical implementations, it equips researchers, tool developers, and instructors with a generalizable framework for improving NLP evaluation practices. The book is intended for researchers, graduate students, and professionals working in NLP, corpus linguistics, and computational linguistics.
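The description does not show how the jp-algorithm works internally. To make the underlying problem concrete, here is a minimal sketch of a generic technique, not the book's algorithm. It assumes that the gold and system outputs segment the same underlying text and differ only in whitespace and boundary placement. Each unit is mapped to character offsets over the whitespace-free text, and two forward-only pointers group the units into minimal blocks whose boundaries coincide, which keeps the running time linear in the input size. The names `char_spans` and `align_units` are hypothetical.

```python
from typing import List, Tuple


def _strip_ws(text: str) -> str:
    """Remove all whitespace so that only the characters of the text remain."""
    return "".join(text.split())


def char_spans(units: List[str]) -> List[Tuple[int, int]]:
    """Map each unit (token or sentence) to a (start, end) span over the
    whitespace-free text. Raises ValueError on units with no characters."""
    spans: List[Tuple[int, int]] = []
    pos = 0
    for unit in units:
        length = len(_strip_ws(unit))
        if length == 0:
            raise ValueError(f"empty unit: {unit!r}")
        spans.append((pos, pos + length))
        pos += length
    return spans


def align_units(gold: List[str], system: List[str]) -> List[Tuple[List[int], List[int]]]:
    """Group gold and system indices into minimal blocks whose boundaries
    coincide. Both pointers only move forward, so the loop is
    O(len(gold) + len(system))."""
    # Assumption: both sides cover the same text once whitespace is ignored.
    if _strip_ws("".join(gold)) != _strip_ws("".join(system)):
        raise ValueError("gold and system texts differ beyond whitespace")
    g_spans, s_spans = char_spans(gold), char_spans(system)
    blocks: List[Tuple[List[int], List[int]]] = []
    i = j = 0
    while i < len(gold) and j < len(system):
        g_block, s_block = [i], [j]
        g_end, s_end = g_spans[i][1], s_spans[j][1]
        i, j = i + 1, j + 1
        # Extend whichever side ends earlier until both blocks end at the same offset.
        while g_end != s_end:
            if g_end < s_end:
                g_block.append(i)
                g_end = g_spans[i][1]
                i += 1
            else:
                s_block.append(j)
                s_end = s_spans[j][1]
                j += 1
        blocks.append((g_block, s_block))
    return blocks


if __name__ == "__main__":
    # Gold splits the contraction and the final period; the system does not.
    print(align_units(["Do", "n't", "stop", "."], ["Don't", "stop."]))
    # [([0, 1], [0]), ([2, 3], [1])]
```

Because whitespace is ignored, the same function can align sentence segmentations when sentence strings are passed instead of tokens: `align_units(["Hello there.", "How are you?"], ["Hello there. How are you?"])` yields one block pairing both gold sentences with the single system sentence. Deciding how a metric such as PARSEVAL or F0.5 should score each block is a separate step and is not shown here.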

Specifications

Contributors

- Author(s):

- Publisher:

Contents

- Number of pages: 129

- Language: English

- Series:

Properties

- Product code (EAN): 9783032165626

- Publication date: 16 April 2026

- Edition: Hardcover

- Format: Sewn

- Dimensions: 168 mm × 240 mm

Only at Standaard Boekhandel
